What is Spark?

Have you heard of Hadoop?

If so, what does it provide for data processing?

Hadoop provides us with:

- HDFS - Storage

- MapReduce - Computation/Processing

- YARN - Resource Management

What is a Spark Cluster?

A Spark cluster is a collection of master and worker (slave) nodes.

What is a Node?

A node can be thought of as a resource with its own computing capabilities.

Spark is not a replacement for Hadoop; rather, it is an alternative to MapReduce.

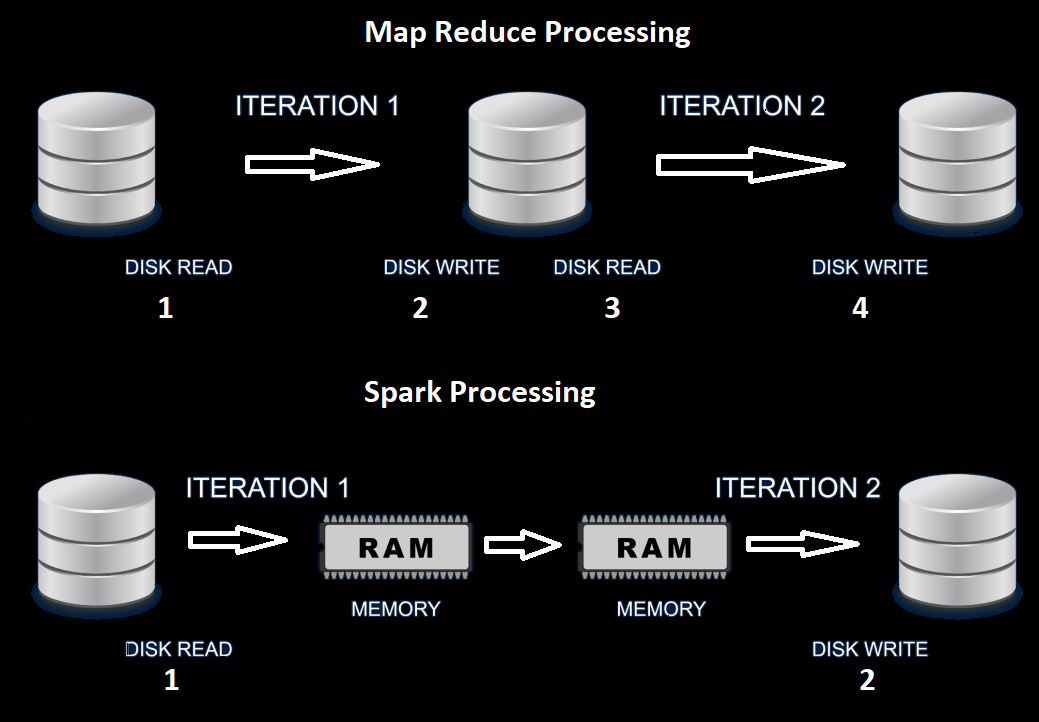

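Why is Spark faster than MapReduce?

MapReduce involves more disk read and write operations than Spark, because it writes intermediate results to disk between processing steps. Spark provides low latency because it keeps intermediate data in memory where possible and therefore involves fewer disk read and write operations.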
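
The difference in disk activity can be illustrated with a toy model in plain Python. This is not Spark or Hadoop code: the names `DiskCounter`, `run_disk_between_stages`, and `run_in_memory` are hypothetical, and the sketch assumes a simple chain of three stages over a small list of numbers. It only counts how often each style touches the disk.

```python
import json
import os
import tempfile


class DiskCounter:
    """Hypothetical helper that counts disk reads and writes."""

    def __init__(self):
        self.reads = 0
        self.writes = 0


def write_to_disk(path, data, counter):
    with open(path, "w") as f:
        json.dump(data, f)
    counter.writes += 1


def read_from_disk(path, counter):
    with open(path) as f:
        data = json.load(f)
    counter.reads += 1
    return data


def run_disk_between_stages(input_path, stages, workdir, counter):
    """MapReduce-style chain: each stage reads its input from disk
    and writes its output back to disk before the next stage starts."""
    current_path = input_path
    result = []
    for i, stage in enumerate(stages):
        data = read_from_disk(current_path, counter)
        result = [stage(x) for x in data]
        current_path = os.path.join(workdir, f"stage_{i}.json")
        write_to_disk(current_path, result, counter)
    return result


def run_in_memory(input_path, stages, workdir, counter):
    """Spark-style chain (simplified): read the input once, keep the
    intermediate results in memory, and write only the final output."""
    data = read_from_disk(input_path, counter)
    for stage in stages:
        data = [stage(x) for x in data]
    write_to_disk(os.path.join(workdir, "final.json"), data, counter)
    return data


def demo():
    # Three illustrative stages; any chain of per-element functions works.
    stages = [lambda x: x + 1, lambda x: x * 2, lambda x: x - 3]
    with tempfile.TemporaryDirectory() as workdir:
        input_path = os.path.join(workdir, "input.json")
        with open(input_path, "w") as f:
            json.dump(list(range(5)), f)  # input setup, not counted
        disk, mem = DiskCounter(), DiskCounter()
        out_disk = run_disk_between_stages(input_path, stages, workdir, disk)
        out_mem = run_in_memory(input_path, stages, workdir, mem)
    return out_disk, out_mem, disk, mem


if __name__ == "__main__":
    out_disk, out_mem, disk, mem = demo()
    print("disk-between-stages:", disk.reads, "reads,", disk.writes, "writes")
    print("in-memory:", mem.reads, "reads,", mem.writes, "writes")
```

Both functions produce the same result, but in this model the disk-based chain performs one read and one write per stage, while the in-memory chain performs one read and one write in total. Real Spark jobs may still spill to disk when data does not fit in memory, so treat this as an intuition aid only.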